BSOD after enabling S2D on R730xd with RAID1 setup on OS disk

So we got our hardware and got busy. First up was testing whether the S130 RAID1 setup would perform well enough, because there were rumours that the software RAID on the S130 was terrible. I did a quick test with CrystalDiskMark and compared the results to a few other systems, both R630s (one with SAS and SSD), and the figures did seem acceptable. This was just an indication, but it was enough to put me at ease for now.

So we went forward: updated all the firmware using the Dell Lifecycle Controller, created the network config, configured RDMA, disabled IPv6 like on our other servers, fixed WSUS (it needed KB4022715 to get it to detect WSUS, btw), configured the VMQ settings, tested and configured the cluster, etc. After our basic config we could finally issue the following command:

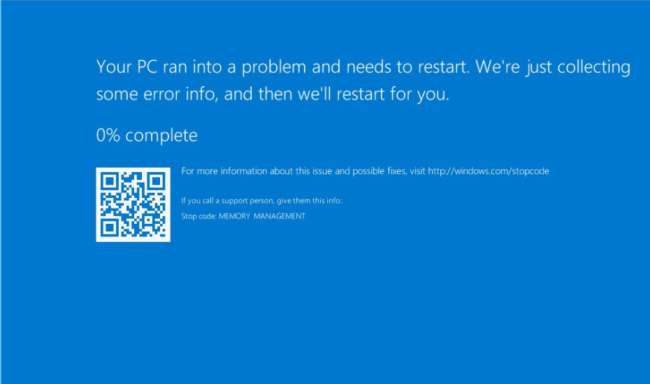

Enable-ClusterStorageSpacesDirect

But after a reboot we got a BSOD with: “Stop code: MEMORY MANAGEMENT”

We couldn’t get the system back to work; F8 safe mode did not work. The only option was to use the “last known good configuration” option, but as that overwrote the logs we couldn’t find out what happened. The other nodes did not show any errors, but all of them got BSODs after rebooting. Funnily enough, we could prevent the BSOD by issuing “Disable-ClusterS2D” on the nodes that hadn’t rebooted yet.

Re-enabling S2D with the -Verbose switch yielded no clues either:

PS C:\Windows\system32> Enable-ClusterStorageSpacesDirect -verbose

VERBOSE: 2017/06/23-16:30:22.057 Ensuring that all nodes support S2D

VERBOSE: 2017/06/23-16:30:22.073 Querying storage information

VERBOSE: 2017/06/23-16:30:33.972 Sorted disk types present (fast to slow): NVMe,SSD,HDD. Number of types present: 3

VERBOSE: 2017/06/23-16:30:33.972 Checking that nodes support the desired cache state

VERBOSE: 2017/06/23-16:30:33.988 Checking that all disks support the desired cache state

Confirm

Are you sure you want to perform this action?

Performing operation 'Enable Cluster Storage Spaces Direct' on Target 'S2D01'.

[Y] Yes [A] Yes to All [N] No [L] No to All [S] Suspend [?] Help (default is "Y"):

VERBOSE: 2017/06/23-16:31:01.478 Creating health resource

VERBOSE: 2017/06/23-16:31:01.571 Setting cluster property

VERBOSE: 2017/06/23-16:31:01.571 Setting default fault domain awareness on clustered storage subsystem

VERBOSE: 2017/06/23-16:31:02.712 Waiting until physical disks are claimed

VERBOSE: 2017/06/23-16:31:05.729 Number of claimed disks on node 'Server01': 18/18

VERBOSE: 2017/06/23-16:31:05.729 Number of claimed disks on node 'Server03': 18/18

VERBOSE: 2017/06/23-16:31:05.744 Number of claimed disks on node 'Server04': 18/18

VERBOSE: 2017/06/23-16:31:05.744 Number of claimed disks on node 'Server02': 18/18

VERBOSE: 2017/06/23-16:31:05.760 Node 'Server01': Waiting until cache reaches desired state (HDD:'ReadWrite' SSD:'WriteOnly')

VERBOSE: 2017/06/23-16:31:05.776 SBL disks initialized in cache on node 'Server01': 18 (18 on all nodes)

VERBOSE: 2017/06/23-16:31:05.791 SBL disks initialized in cache on node 'Server03': 18 (36 on all nodes)

VERBOSE: 2017/06/23-16:31:05.791 SBL disks initialized in cache on node 'Server04': 18 (54 on all nodes)

VERBOSE: 2017/06/23-16:31:05.807 SBL disks initialized in cache on node 'Server02': 18 (72 on all nodes)

VERBOSE: 2017/06/23-16:31:05.807 Cache reached desired state on Server01

VERBOSE: 2017/06/23-16:31:05.807 Node 'Server03': Waiting until cache reaches desired state (HDD:'ReadWrite' SSD:'WriteOnly')

VERBOSE: 2017/06/23-16:31:05.854 Cache reached desired state on Server03

VERBOSE: 2017/06/23-16:31:05.885 Node 'Server04': Waiting until cache reaches desired state (HDD:'ReadWrite' SSD:'WriteOnly')

VERBOSE: 2017/06/23-16:31:05.932 Cache reached desired state on Server04

VERBOSE: 2017/06/23-16:31:05.932 Node 'Server02': Waiting until cache reaches desired state (HDD:'ReadWrite' SSD:'WriteOnly')

VERBOSE: 2017/06/23-16:31:05.979 Cache reached desired state on Server02

VERBOSE: 2017/06/23-16:31:05.979 Waiting until SBL disks are surfaced

VERBOSE: 2017/06/23-16:31:09.026 Disks surfaced on node 'Server01': 72/72

VERBOSE: 2017/06/23-16:31:09.073 Disks surfaced on node 'Server03': 72/72

VERBOSE: 2017/06/23-16:31:09.120 Disks surfaced on node 'Server04': 72/72

VERBOSE: 2017/06/23-16:31:09.167 Disks surfaced on node 'Server02': 72/72

VERBOSE: 2017/06/23-16:31:12.599 Waiting until all physical disks are reported by clustered storage subsystem

VERBOSE: 2017/06/23-16:31:16.636 Physical disks in clustered storage subsystem: 72

VERBOSE: 2017/06/23-16:31:16.636 Querying pool information

VERBOSE: 2017/06/23-16:31:17.011 Starting health providers

VERBOSE: 2017/06/23-16:33:51.265 Creating S2D pool

VERBOSE: 2017/06/23-16:33:57.748 Checking that all disks support the desired cache state

We tried a lot. First we checked all available firmware updates and drivers, but all seemed in order. We checked the hardware, but as all nodes BSOD’d after a reboot we considered a memory failure to be unlikely. We disabled the RDMA cards, installed the Mellanox drivers instead of the Dell drivers, etc.
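For what it's worth, the verbose output above is at least internally consistent: four nodes each claim 18 disks, and the clustered storage subsystem reports 72. Below is a minimal sketch that tallies this from a saved transcript. The `$log` sample is copied from the output above; the variable names are made up for illustration, and it only parses text, so it says nothing about the health of the disks themselves.

```powershell
# Sample lines copied from the verbose output above.
$log = @'
VERBOSE: 2017/06/23-16:31:05.729 Number of claimed disks on node 'Server01': 18/18
VERBOSE: 2017/06/23-16:31:05.729 Number of claimed disks on node 'Server03': 18/18
VERBOSE: 2017/06/23-16:31:05.744 Number of claimed disks on node 'Server04': 18/18
VERBOSE: 2017/06/23-16:31:05.744 Number of claimed disks on node 'Server02': 18/18
VERBOSE: 2017/06/23-16:31:16.636 Physical disks in clustered storage subsystem: 72
'@ -split '\r?\n'

# One object per "claimed disks" line: node name, claimed count, expected count.
$claimed = foreach ($line in $log) {
    if ($line -match "claimed disks on node '(?<Node>[^']+)': (?<Claimed>\d+)/(?<Total>\d+)") {
        [pscustomobject]@{
            Node    = $Matches['Node']
            Claimed = [int]$Matches['Claimed']
            Total   = [int]$Matches['Total']
        }
    }
}

# The total the clustered storage subsystem reports.
$reported = foreach ($line in $log) {
    if ($line -match 'clustered storage subsystem: (?<Reported>\d+)') {
        [int]$Matches['Reported']
    }
}

$sumClaimed = ($claimed | Measure-Object -Property Claimed -Sum).Sum

# Nodes that claimed fewer disks than expected (none in this log).
$short = @($claimed | Where-Object { $_.Claimed -lt $_.Total })

'{0} nodes, {1} claimed disks, {2} reported by the subsystem' -f @($claimed).Count, $sumClaimed, $reported
```

If the sum of claimed disks and the reported total disagree, or `$short` is not empty, that would be a hint to look at the disks on a specific node before looking anywhere else.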

We had a gut feeling that it might have to do with the RAID1 setup of the S130, so we made some backups and reinstalled the system with AHCI enabled on the S130 instead of RAID1. We repeated the same config, ran Enable-ClusterStorageSpacesDirect and prayed. And guess what, everything rebooted with no problem!
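If you want to see how the OS disk is presented to Windows before enabling S2D, something like the sketch below could help. `Get-Disk` and its `IsBoot`, `IsSystem` and `BusType` properties are standard; the function name `Get-BootDiskBusType` is made up for this post. My assumption (not something we verified) is that the S130 in RAID1 mode shows up with a bus type of RAID, while in AHCI mode it shows up as SATA.

```powershell
function Get-BootDiskBusType {
    param(
        # Disk objects to inspect; defaults to the disks of the local node.
        [object[]]$Disk = (Get-Disk)
    )

    # Keep only the boot/system disk(s) and show how they are attached.
    $Disk |
        Where-Object { $_.IsBoot -or $_.IsSystem } |
        Select-Object Number, FriendlyName, BusType
}

# On a node: Get-BootDiskBusType
# Across the cluster (assumes PowerShell remoting is enabled on the nodes):
# Invoke-Command -ComputerName Server01, Server02, Server03, Server04 -ScriptBlock { Get-Disk | Where-Object IsBoot | Select-Object FriendlyName, BusType }
```

The optional `-Disk` parameter is only there so the filter can be tried against saved or fake disk objects without touching a real node.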

I’m gonna try to speak to the engineer who assured me this was a supported configuration for the Dell R730xd and S2D; hopefully he can tell me some useful things. On the Dell TechCenter there is a document that shows this to be a supported configuration too.

But maybe I’ve understood them wrong, as the firmware document mentions an HBA330 controller instead of the S130. And so does the deployment guide.

Which would explain why I was surprised to have to resort to software RAID. We haven’t found a RAID HBA330 controller, though maybe we did things wrong. I’m gonna find out!

Update 20170801: there is a private fix from Dell; request it through Dell support. Read more about it here.

Btw, if you need to re-create the S2D config (as we had to do multiple times) you need to clear the existing configuration first, which means:

- Remove the storage from the Failover Cluster Manager

- Remove the storage pool:

Get-StoragePool | ? IsPrimordial -eq $false | Set-StoragePool -IsReadOnly:$false

Get-StoragePool | ? IsPrimordial -eq $false | Get-VirtualDisk | Remove-VirtualDisk

Get-StoragePool | ? IsPrimordial -eq $false | Remove-StoragePool -Confirm:$false

- Wipe disks:

Get-PhysicalDisk | Reset-PhysicalDisk

Get-Disk | ? Number -ne $null | ? IsBoot -ne $true | ? IsSystem -ne $true | ? PartitionStyle -ne RAW | % {

$_ | Set-Disk -isoffline:$false

$_ | Set-Disk -isreadonly:$false

$_ | Clear-Disk -RemoveData -RemoveOEM -Confirm:$false

$_ | Set-Disk -isreadonly:$true

$_ | Set-Disk -isoffline:$true

}

- Reboot the nodes (I think the Failover Cluster Manager needs to free its claim on the storage; a service restart may be enough).
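After the cleanup and reboot, a quick check like the sketch below could show whether the disks are eligible for a new pool again. `Get-PhysicalDisk` and its `CanPool` and `CannotPoolReason` properties are standard; the function name `Get-UnpoolableDisk` is made up for this post. I would expect the OS disk to be listed as well, since it is never poolable, so only the data disks in the output are worth worrying about.

```powershell
function Get-UnpoolableDisk {
    param(
        # Physical disk objects to inspect; defaults to the disks of the local node.
        [object[]]$PhysicalDisk = (Get-PhysicalDisk)
    )

    # List every disk that cannot be added to a pool, with the reason Windows gives.
    $PhysicalDisk |
        Where-Object { -not $_.CanPool } |
        Select-Object FriendlyName, SerialNumber, MediaType, CanPool, CannotPoolReason
}

# On a node: Get-UnpoolableDisk | Format-Table -AutoSize
```

If data disks still show up here after the wipe, the reason column is the first place to look before running Enable-ClusterStorageSpacesDirect again.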