Some things I learned ordering an S2D configuration

So, it’s been a while. We were busy getting the hardware in, but that turned out to be more trouble than usual: it took us 3 months because of the lack of details on TechNet, and another 3 months to get all the details from Dell! Time to write an update on the things I learned.

My post about the S2D TechNet update left some open questions, so this post covers the information I got from Dell.

- I asked about the Dell S2D appliances, but our contact at Dell says he doesn’t know of any such device. I did hear that the 14G servers (mid-2017) will all be S2D certified. As some of the 14G servers will support more than 3TB of memory and 24x NVMe drives, I’m curious about the possibilities that will bring.

- Besides the R730xd, there are some new reference designs: the R630 and R730 are mentioned. If I get hold of them, I will post them here.

- At first I didn’t get official confirmation that the drives I chose (1.6TB Intel S3610 and 1.8TB 4K SAS disks) can be updated through PowerShell. After asking again, I got confirmation that the drives can be updated individually through PowerShell.

- I mentioned this in my previous blog post:

"Separate boot device. This is interesting, because selecting the HBA330 means there is no RAID controller available (not supported in S2D, see the line above) for the two disks in the flex bays. So how do I set up a RAID1 mirror? Do I need to use Windows Disk Management to create a software mirror? Not very appealing. I’ve asked Dell about this and got the following answer:"

Choosing the HBA330 (required because RAID controllers are not supported!) would connect all disks, including the 2 disks in the flex bays. The solution is to connect the flex bays to the onboard PERC controller so RAID1 can be configured. This worked, but it gave warnings and wasn’t officially supported by Dell. That is why the reference architecture mentioned in my previous S2D posts lists only 1 boot disk. This should all be resolved now (they said the ordering system would allow this from February 20th, 2017), so the OS/boot disks can be set up in RAID1 on the onboard PERC without warnings and with official support. I will confirm as soon as I have the hardware.

- Dell support did mention some other requirements:

"We require the same processor model, the same SSDs/HDDs (endurance, capacity and type), and the same NICs and memory. The disks don’t have to be from the same manufacturer, but they must be the same type (SATA SSD/HDD), have the same sector size (512) and the same capacity and performance. We cover this by providing the tested drive and an alternative that is supported."

- Dell gave the following specs for the 1.6TB Intel S3610 SSDs. They seem to be within the 5+ DWPD guidelines on TechNet:

1.6TB Solid State Drive SATA Mix Use MLC 6Gbps 2.5in

Capacity: 1.6TB

Speed: Solid State Memory

Interface Types: SATA

Form Factor: 2.5inx15mm SFF Server Drive

Encryption/SED Supported

Sector Size: 512 / 512e

Sustained Throughput: 1900 / 850

Average Seek Time: 115 μs

Electrical Interface: SAS-3 Serial Attached SCSI v3 – 6Gbps

SSD Type: MLC

SSD Endurance DWPD: 4-15 Ent. Mixed Use

- However, the Dell solutions architect told me we could use the PM1635a instead, which is read-optimized, has a lower DWPD (1-3) and is much cheaper. I asked whether this would cause any problems with Dell support, and he guaranteed that it wouldn’t. So who am I to argue?
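To put these endurance ratings in perspective: DWPD is relative to the drive's capacity, so it is often easier to compare as total terabytes written (TBW) over the warranty period. The Python sketch below does that conversion for the 1.6TB drives discussed here, using the DWPD ranges from this post. The 5-year warranty period is my assumption, not a figure from Dell. It may also help explain the architect's advice (my interpretation, not something Dell stated): with NVMe, SSD and HDD in the same node, S2D uses the NVMe drives as cache, so incoming writes land on the NVMe cache first.

```python
# Sketch: convert a DWPD rating into total terabytes written (TBW).
# ASSUMPTION: a 5-year warranty period; check the real warranty per drive.

WARRANTY_YEARS = 5  # assumption, not taken from Dell's spec sheets


def tbw(capacity_tb: float, dwpd: float, years: float = WARRANTY_YEARS) -> float:
    """Terabytes that can be written over `years` at `dwpd` full drive writes per day."""
    return capacity_tb * dwpd * 365 * years


if __name__ == "__main__":
    # (capacity in TB, DWPD) using the ranges quoted in this post
    drives = {
        "Intel S3610 1.6TB, 4 DWPD": (1.6, 4),
        "Intel S3610 1.6TB, 15 DWPD": (1.6, 15),
        "PM1635a 1.6TB, 1 DWPD": (1.6, 1),
        "PM1635a 1.6TB, 3 DWPD": (1.6, 3),
    }
    for name, (capacity_tb, dwpd) in drives.items():
        print(f"{name}: ~{tbw(capacity_tb, dwpd):,.0f} TB written over {WARRANTY_YEARS} years")
```

Under that assumption, even a 1 DWPD read-optimized 1.6TB drive allows roughly 2,920 TB of writes over five years; whether that is enough depends on how much write load actually reaches the capacity tier.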

- I got the specs for the Intel P3700 NVMe as well, so let’s share those too:

2TB INTEL PCIE

Capacity: 2TB

Speed: Solid State Memory

Interface Types: PCIe

Form Factor: Half-Height/Half-Length

Sector Size: 512 / 512e

Sustained Throughput: 2800

Electrical Interface: PCIe – v3.0 – 126GBps

SSD Type: MLC

SSD Endurance DWPD: 16-35 Ent. Write Intensive

However, it seems the P3700 is labelled EOL although it’s still configurable.

- So we decided to use the Dell (actually Samsung) PM1725. These are not add-in cards but front-loaded 2.5″ drives (which are slower!).

Capacity: 800GB, 1.6TB, 3.2TB and 6.4TB (PM1725)

Interface: PCIe Gen3 x4 (2.5”) & PCIe Gen3 x8 (Add-In-Card)

Sequential read/write:

Add-in card: Up to 6.0/2.0 GB/s (with 128KB transfer size)

2.5”: Up to 3.1/2.0 GB/s (with 128KB transfer size)

Random read/write:

650,000+/60,000 IOPS – 800GB – 2.5” (with 4KB transfer size)

700,000+/120,000 IOPS – 1.6TB/3.2TB – 2.5” (with 4KB transfer size)

800,000+/120,000 IOPS – 1.6TB/3.2TB/6.4TB – Add-in card (HHHL)

Latency:

Read: 85µs (with Queue Depth = 1)

Write: 20µs (with Queue Depth = 1)

Active power consumption: 25W maximum
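The PCIe link width explains why the 2.5″ version is slower than the add-in card, and the queue-depth-1 latencies show why the random IOPS figures need many outstanding I/Os. The Python sketch below does that arithmetic, using PCIe Gen3's 8 GT/s per lane with 128b/130b encoding and Little's law (IOPS ≈ I/Os in flight ÷ latency). It ignores PCIe protocol overhead and assumes latency stays constant as the queue grows, which it does not in practice, so treat the results as rough bounds.

```python
# Sketch: back-of-the-envelope checks on the PM1725 figures above.
# Uses PCIe Gen3's 8 GT/s per lane and 128b/130b encoding; ignores PCIe
# protocol overhead, so real-world ceilings are somewhat lower.
import math

PCIE_GEN3_GT_PER_S = 8.0        # giga-transfers per second, per lane
PCIE_GEN3_ENCODING = 128 / 130  # 128b/130b line-encoding efficiency


def pcie_gen3_gbytes_per_s(lanes: int) -> float:
    """One-direction PCIe Gen3 link bandwidth in GB/s before protocol overhead."""
    return PCIE_GEN3_GT_PER_S * PCIE_GEN3_ENCODING * lanes / 8  # 8 bits per byte


def iops_from_latency(latency_us: float, queue_depth: int = 1) -> float:
    """Little's law: completed I/Os per second = I/Os in flight / latency."""
    return queue_depth / (latency_us * 1e-6)


def min_queue_depth(target_iops: float, latency_us: float) -> float:
    """I/Os that must be in flight to reach target_iops if latency stayed constant."""
    return target_iops * latency_us * 1e-6


if __name__ == "__main__":
    x4, x8 = pcie_gen3_gbytes_per_s(4), pcie_gen3_gbytes_per_s(8)
    print(f"Gen3 x4 ceiling: {x4:.2f} GB/s vs. 3.1 GB/s reads on the 2.5in drive")
    print(f"Gen3 x8 ceiling: {x8:.2f} GB/s vs. 6.0 GB/s reads on the add-in card")
    print(f"QD1 read at 85us:  ~{iops_from_latency(85):,.0f} IOPS")
    print(f"QD1 write at 20us: ~{iops_from_latency(20):,.0f} IOPS")
    depth = math.ceil(min_queue_depth(650_000, 85))
    print(f"650,000 read IOPS at 85us needs at least {depth} I/Os in flight")
```

A Gen3 x4 link tops out just under 4 GB/s, so the 2.5″ drive can't match the x8 add-in card's 6.0 GB/s; and at queue depth 1 the drive delivers roughly 11,800 read IOPS, far below the 650,000+ on the spec sheet.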

- But these had really long delivery times (55 working days), so we decided to go with the P3600 series instead; the specs can be found here.

I have never done sooo much research before ordering hardware and software (this means you, Microsoft). But we have finally ordered our hardware. I decided on a 4-node cluster, a hyper-converged Hyper-V solution, with each node based on:

PowerEdge R730xd

1x PE R730/xd Motherboard MLK

2x Intel Xeon E5-2650L v4 1.7GHz,35M Cache,9.6GT/s QPI,Turbo,HT,14C/28T (65W) Max Mem 2400MHz

1x R730/xd PCIe Riser 2, Center

1x R730/xd PCIe Riser 1, Right

1x Chassis with up to 24x 2.5″ Hard Drives and 2x 2.5″ Flex Bay Hard Drives

1x DIMM Blanks for System with 2 Processors

1x Performance Optimized

1x MOD,INFO,ORD-ENTRY,2400,LRDIMM

8x 64GB LRDIMM, 2400MT/s, Quad Rank, x4 Data Width

2x Standard Heatsink for PowerEdge R730/R730xd

1x iDRAC8 Enterprise, integrated Dell Remote Access Controller, Enterprise

4x 1.6TB Solid State Drive SATA Mix Use MLC 6Gbps 2.5in Hot-plug Drive, PM1635a

12x 1.8TB 10K RPM SAS 12Gbps 4Kn 2.5in Hot-plug Hard Drive

2x 200GB Solid State Drive SATA Mix Use MLC 6Gbps 2.5in Flex Bay Drive, 13G, Hawk-M4E

2x Intel 2TB, NVMe, Performance Express Flash, HHHL Card, P3600, DIB

1x PERC HBA330 12Gbps Controller Minicard

2x C13 to C14, PDU Style, 10 AMP, 6.5 Feet (2m), Power Cord

1x Dual, Hot-plug, Redundant Power Supply (1+1), 1100W

1x PowerEdge Server FIPS TPM 2.0

1x Mellanox ConnectX-3 Dual Port 10Gb Direct Attach/SFP+ Server Ethernet Network Adapter

1x Intel Ethernet X540 DP 10Gb BT + I350 1Gb BT DP Network Daughter Card

1x No RAID for H330/H730/H730P (1-24 HDDs or SSDs) Software

1x Performance BIOS Settings

1x OpenManage Essentials, Server Configuration Management

1x Electronic System Documentation and OpenManage DVD Kit for R730 and R730xd Service

1x Deployment Consulting 1Yr 1 Case Remote Consulting Service
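A quick sanity check of what this order adds up to for the 4-node cluster. The Python sketch below uses the drive counts from the per-node list above; with NVMe, SSD and HDD in the same server, S2D uses the fastest drives (the NVMe P3600s) as cache and the SSDs and HDDs as capacity. The three-way mirror is my assumption for illustration (it keeps three copies, so a third of the raw capacity is usable), and reserve capacity, file system overhead and the TB vs. TiB difference are ignored.

```python
# Sketch: raw capacity and cache ratio for the 4-node R730xd build above.
# Drive counts/sizes come from the per-node order list. The three-way mirror
# is an illustrative assumption; reserve capacity, file system overhead and
# TB (decimal) vs. TiB are ignored.
from dataclasses import dataclass


@dataclass(frozen=True)
class DriveGroup:
    count: int
    size_tb: float

    @property
    def total_tb(self) -> float:
        return self.count * self.size_tb


NODES = 4
NVME_CACHE = DriveGroup(count=2, size_tb=2.0)     # P3600, fastest tier -> S2D cache
SSD_CAPACITY = DriveGroup(count=4, size_tb=1.6)   # PM1635a
HDD_CAPACITY = DriveGroup(count=12, size_tb=1.8)  # 10K SAS 4Kn


def per_node_summary() -> dict:
    capacity_tb = SSD_CAPACITY.total_tb + HDD_CAPACITY.total_tb
    capacity_drives = SSD_CAPACITY.count + HDD_CAPACITY.count
    return {
        "cache_tb": NVME_CACHE.total_tb,
        "capacity_tb": capacity_tb,
        "cache_ratio": NVME_CACHE.total_tb / capacity_tb,
        # capacity drives bound to each cache drive; a whole number keeps bindings even
        "drives_per_cache_drive": capacity_drives / NVME_CACHE.count,
    }


def cluster_capacity_tb(nodes: int = NODES, mirror_copies: int = 3) -> tuple:
    """Return (raw capacity, capacity left after keeping `mirror_copies` copies)."""
    raw = per_node_summary()["capacity_tb"] * nodes
    return raw, raw / mirror_copies


if __name__ == "__main__":
    node = per_node_summary()
    raw, usable = cluster_capacity_tb()
    print(f"Per node: {node['capacity_tb']:.1f} TB capacity, {node['cache_tb']:.1f} TB cache "
          f"({node['cache_ratio']:.1%}), {node['drives_per_cache_drive']:.0f} capacity drives per cache drive")
    print(f"Cluster: {raw:.1f} TB raw, ~{usable:.1f} TB with a three-way mirror")
```

Per node that works out to 28 TB of capacity behind 4 TB of NVMe cache, with 8 capacity drives bound to each cache drive; across 4 nodes that is 112 TB raw, or roughly 37 TB with a three-way mirror.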

We wanted to use our own network switches for now. These are Dell N4032s, which can take the 40Gb QSFP+ modules (figure 6 on page 11 of the getting started manual) and the quad breakout cables (figure 8 on page 12). However, we were advised to use the S4048 because of the reference designs and the larger buffers on the S-series switches.

This was a bit of a bummer: we don’t have any (Q)SFP+ infrastructure, because a while back we decided to standardize on 10GBase-T (UTP cabling) switches, and ordering the S4048 would cost us 25k while we would only use 8 of its 48 ports. So we asked to use the S4048T instead, which has the same buffer sizes as the S4048, 48x 10GBase-T ports and 6x QSFP+ ports, each of which can be broken out into 4x 10Gbit SFP+. The regular 10Gbit ports on the S4048T can use UTP cabling, which is our standard, and the QSFP+ ports will be used for:

- a 40Gbit interlink (consumes 1 QSFP+ port per switch)

- a 40Gbit uplink to our N4032 switch.

- one QSFP+ port with a breakout cable providing 4x 10Gbit SFP+ ports for our S2D nodes (4 currently)

- which leaves 3 QSFP+ ports for other uses or more S2D nodes.
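The QSFP+ port plan above can be written down as a small per-switch budget. This Python sketch takes the port counts from the text; the assumptions that each S2D node uses one 10Gbit port per switch and that spare QSFP+ ports would also get 4x 10Gbit breakouts are mine, for illustration only.

```python
# Sketch: QSFP+ port budget per S4048T switch, following the plan above.
# ASSUMPTIONS (illustrative): each S2D node uses one 10Gbit port per switch,
# and any spare QSFP+ port would also get a 4x 10Gbit breakout cable.

QSFP_PORTS = 6       # S4048T: 6x 40Gbit QSFP+ (plus 48x 10GBase-T)
BREAKOUT_FANOUT = 4  # one QSFP+ port -> 4x 10Gbit SFP+

planned = {
    "switch interlink": 1,
    "uplink to N4032": 1,
    "breakout for S2D nodes": 1,
}


def spare_ports(plan: dict, total: int = QSFP_PORTS) -> int:
    """QSFP+ ports left over after the plan; fails if the plan doesn't fit."""
    used = sum(plan.values())
    if used > total:
        raise ValueError(f"plan uses {used} QSFP+ ports, switch only has {total}")
    return total - used


def node_ports(plan: dict) -> int:
    """10Gbit SFP+ ports available for S2D nodes via breakout cables."""
    return plan.get("breakout for S2D nodes", 0) * BREAKOUT_FANOUT


if __name__ == "__main__":
    spare = spare_ports(planned)
    print(f"Spare QSFP+ ports per switch: {spare}")
    print(f"10Gbit node ports now: {node_ports(planned)}, "
          f"if all spares get breakouts: {node_ports(planned) + spare * BREAKOUT_FANOUT}")
```

With 3 spare ports, this leaves room for 12 more node ports per switch, 16 in total, if none of the spares are needed for other uses.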

So for the networking we ordered:

2x S4048T

3x QSFP+ 40GbE Module, 2-Port, Hot Swap, used for 40GbE Uplink, Stacking, or 8x 10GbE Breakout, Cust Kit (cables not included)

2x Dell Networking,Cable,40GbE (QSFP+) to 4x 10GbE SFP+ Passive Copper Breakout Cable, 2 Meter Customer Kit

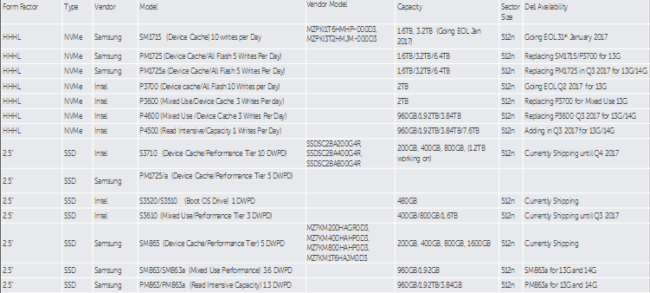

I also got some very useful details while talking with the solutions architect, such as the availability and DWPD of the NVMe drives and whether they are installed as 2.5″ drives or as HHHL (drop-in) cards.

So, that’s what ordering hardware taught me. Glad that’s over! I’ll do a follow-up as soon as the hardware arrives!